很多深度神经网络模型需要加载预训练过的Vgg参数,比如说:风格迁移、目标检测、图像标注等计算机视觉中常见的任务。那么到底如何加载Vgg模型呢?Vgg文件的参数到底有何意义呢?加载后的模型该如何使用呢?

本文将以Vgg19为例子,详细说明Tensorflow如何加载Vgg预训练模型。

实验环境

GTX1050-ti, cuda9.0

Window10, Tensorflow 1.12

展示Vgg19构造

import tensorflow as tf

import numpy as np

import scipy.io

data_path = 'model/vgg19.mat' # data_path指下载下来的Vgg19预训练模型的文件地址

# 读取Vgg19文件

data = scipy.io.loadmat(data_path)

# 打印Vgg19的数据类型及其组成

print("type: ", type(data))

print("data.keys: ", data.keys())

# 得到对应卷积核的矩阵

weights = data['layers'][0]

# 定义Vgg19的组成

layers = (

'conv1_1', 'relu1_1', 'conv1_2', 'relu1_2', 'pool1',

'conv2_1', 'relu2_1', 'conv2_2', 'relu2_2', 'pool2',

'conv3_1', 'relu3_1', 'conv3_2', 'relu3_2', 'conv3_3',

'relu3_3', 'conv3_4', 'relu3_4', 'pool3',

'conv4_1', 'relu4_1', 'conv4_2', 'relu4_2', 'conv4_3',

'relu4_3', 'conv4_4', 'relu4_4', 'pool4',

'conv5_1', 'relu5_1', 'conv5_2', 'relu5_2', 'conv5_3',

'relu5_3', 'conv5_4', 'relu5_4'

)

# 打印Vgg19不同卷积层所对应的维度

for i, name in enumerate(layers):

kind = name[:4]

if kind == 'conv':

print("%s: %s" % (name, weights[i][0][0][2][0][0].shape))

elif kind == 'relu':

print(name)

elif kind == 'pool':

print(name)

代码输出结果如下:

type: <class 'dict'>

data.keys: dict_keys(['__header__', '__version__', '__globals__', 'layers', 'meta'])

conv1_1: (3, 3, 3, 64)

relu1_1

conv1_2: (3, 3, 64, 64)

relu1_2

pool1

conv2_1: (3, 3, 64, 128)

relu2_1

conv2_2: (3, 3, 128, 128)

relu2_2

pool2

conv3_1: (3, 3, 128, 256)

relu3_1

conv3_2: (3, 3, 256, 256)

relu3_2

conv3_3: (3, 3, 256, 256)

relu3_3

conv3_4: (3, 3, 256, 256)

relu3_4

pool3

conv4_1: (3, 3, 256, 512)

relu4_1

conv4_2: (3, 3, 512, 512)

relu4_2

conv4_3: (3, 3, 512, 512)

relu4_3

conv4_4: (3, 3, 512, 512)

relu4_4

pool4

conv5_1: (3, 3, 512, 512)

relu5_1

conv5_2: (3, 3, 512, 512)

relu5_2

conv5_3: (3, 3, 512, 512)

relu5_3

conv5_4: (3, 3, 512, 512)

relu5_4

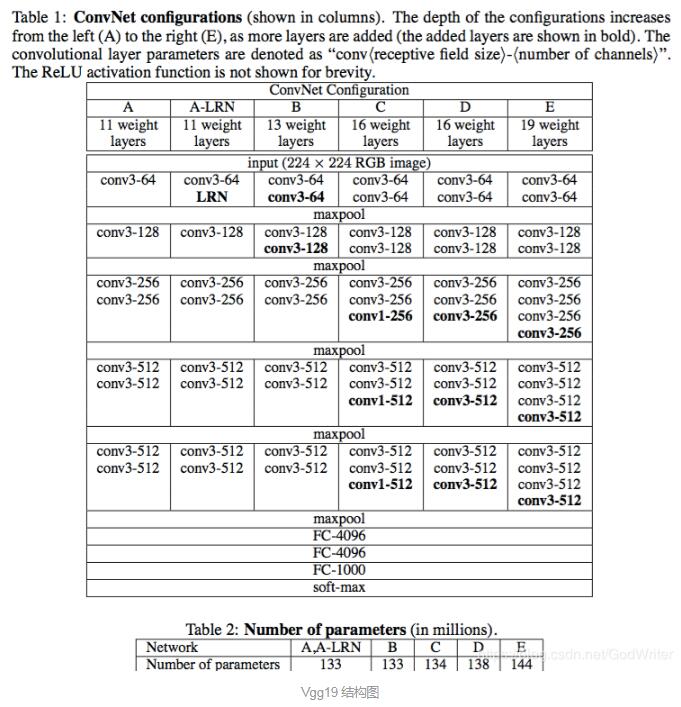

那么Vgg19真实的网络结构是怎么样子的呢,如下图所示:

在本文,主要讨论卷积模块,大家通过对比可以发现,我们打印出来的Vgg19结构及其卷积核的构造的确如论文中给出的Vgg19结构一致。

构建Vgg19模型

def _conv_layer(input, weights, bias):

conv = tf.nn.conv2d(input, tf.constant(weights), strides=(1, 1, 1, 1),

padding='SAME')

return tf.nn.bias_add(conv, bias)

def _pool_layer(input):

return tf.nn.max_pool(input, ksize=(1, 2, 2, 1), strides=(1, 2, 2, 1),

padding='SAME')

class VGG19:

layers = (

'conv1_1', 'relu1_1', 'conv1_2', 'relu1_2', 'pool1',

'conv2_1', 'relu2_1', 'conv2_2', 'relu2_2', 'pool2',

'conv3_1', 'relu3_1', 'conv3_2', 'relu3_2', 'conv3_3',

'relu3_3', 'conv3_4', 'relu3_4', 'pool3',

'conv4_1', 'relu4_1', 'conv4_2', 'relu4_2', 'conv4_3',

'relu4_3', 'conv4_4', 'relu4_4', 'pool4',

'conv5_1', 'relu5_1', 'conv5_2', 'relu5_2', 'conv5_3',

'relu5_3', 'conv5_4', 'relu5_4'

)

def __init__(self, data_path):

data = scipy.io.loadmat(data_path)

self.weights = data['layers'][0]

def feed_forward(self, input_image, scope=None):

# 定义net用来保存模型每一步输出的特征图

net = {}

current = input_image

with tf.variable_scope(scope):

for i, name in enumerate(self.layers):

kind = name[:4]

if kind == 'conv':

kernels = self.weights[i][0][0][2][0][0]

bias = self.weights[i][0][0][2][0][1]

kernels = np.transpose(kernels, (1, 0, 2, 3))

bias = bias.reshape(-1)

current = _conv_layer(current, kernels, bias)

elif kind == 'relu':

current = tf.nn.relu(current)

elif kind == 'pool':

current = _pool_layer(current)

# 在每一步都保存当前输出的特征图

net[name] = current

return net

在上面的代码中,我们定义了一个Vgg19的类别专门用来加载Vgg19模型,并且将每一层卷积得到的特征图保存到net中,最后返回这个net,用于代码后续的处理。

测试Vgg19模型

在给出Vgg19的构造模型后,我们下一步就是如何用它,我们的思路如下:

加载本地图片

定义Vgg19模型,传入本地图片

得到返回每一层的特征图

image_path = "data/test.jpg" # 本地的测试图片

image_raw = tf.gfile.GFile(image_path, 'rb').read()

# 一定要tf.float(),否则会报错

image_decoded = tf.to_float(tf.image.decode_jpeg(image_raw))

# 扩展图片的维度,从三维变成四维,符合Vgg19的输入接口

image_expand_dim = tf.expand_dims(image_decoded, 0)

# 定义Vgg19模型

vgg19 = VGG19(data_path)

net = vgg19.feed_forward(image_expand_dim, 'vgg19')

print(net)

代码结果如下所示:

{'conv1_1': <tf.Tensor 'vgg19_1/BiasAdd:0' shape=(1, ?, ?, 64) dtype=float32>,

'relu1_1': <tf.Tensor 'vgg19_1/Relu:0' shape=(1, ?, ?, 64) dtype=float32>,

'conv1_2': <tf.Tensor 'vgg19_1/BiasAdd_1:0' shape=(1, ?, ?, 64) dtype=float32>,

'relu1_2': <tf.Tensor 'vgg19_1/Relu_1:0' shape=(1, ?, ?, 64) dtype=float32>,

'pool1': <tf.Tensor 'vgg19_1/MaxPool:0' shape=(1, ?, ?, 64) dtype=float32>,

'conv2_1': <tf.Tensor 'vgg19_1/BiasAdd_2:0' shape=(1, ?, ?, 128) dtype=float32>,

'relu2_1': <tf.Tensor 'vgg19_1/Relu_2:0' shape=(1, ?, ?, 128) dtype=float32>,

'conv2_2': <tf.Tensor 'vgg19_1/BiasAdd_3:0' shape=(1, ?, ?, 128) dtype=float32>,

'relu2_2': <tf.Tensor 'vgg19_1/Relu_3:0' shape=(1, ?, ?, 128) dtype=float32>,

'pool2': <tf.Tensor 'vgg19_1/MaxPool_1:0' shape=(1, ?, ?, 128) dtype=float32>,

'conv3_1': <tf.Tensor 'vgg19_1/BiasAdd_4:0' shape=(1, ?, ?, 256) dtype=float32>,

'relu3_1': <tf.Tensor 'vgg19_1/Relu_4:0' shape=(1, ?, ?, 256) dtype=float32>,

'conv3_2': <tf.Tensor 'vgg19_1/BiasAdd_5:0' shape=(1, ?, ?, 256) dtype=float32>,

'relu3_2': <tf.Tensor 'vgg19_1/Relu_5:0' shape=(1, ?, ?, 256) dtype=float32>,

'conv3_3': <tf.Tensor 'vgg19_1/BiasAdd_6:0' shape=(1, ?, ?, 256) dtype=float32>,

'relu3_3': <tf.Tensor 'vgg19_1/Relu_6:0' shape=(1, ?, ?, 256) dtype=float32>,

'conv3_4': <tf.Tensor 'vgg19_1/BiasAdd_7:0' shape=(1, ?, ?, 256) dtype=float32>,

'relu3_4': <tf.Tensor 'vgg19_1/Relu_7:0' shape=(1, ?, ?, 256) dtype=float32>,

'pool3': <tf.Tensor 'vgg19_1/MaxPool_2:0' shape=(1, ?, ?, 256) dtype=float32>,

'conv4_1': <tf.Tensor 'vgg19_1/BiasAdd_8:0' shape=(1, ?, ?, 512) dtype=float32>,

'relu4_1': <tf.Tensor 'vgg19_1/Relu_8:0' shape=(1, ?, ?, 512) dtype=float32>,

'conv4_2': <tf.Tensor 'vgg19_1/BiasAdd_9:0' shape=(1, ?, ?, 512) dtype=float32>,

'relu4_2': <tf.Tensor 'vgg19_1/Relu_9:0' shape=(1, ?, ?, 512) dtype=float32>,

'conv4_3': <tf.Tensor 'vgg19_1/BiasAdd_10:0' shape=(1, ?, ?, 512) dtype=float32>,

'relu4_3': <tf.Tensor 'vgg19_1/Relu_10:0' shape=(1, ?, ?, 512) dtype=float32>,

'conv4_4': <tf.Tensor 'vgg19_1/BiasAdd_11:0' shape=(1, ?, ?, 512) dtype=float32>,

'relu4_4': <tf.Tensor 'vgg19_1/Relu_11:0' shape=(1, ?, ?, 512) dtype=float32>,

'pool4': <tf.Tensor 'vgg19_1/MaxPool_3:0' shape=(1, ?, ?, 512) dtype=float32>,

'conv5_1': <tf.Tensor 'vgg19_1/BiasAdd_12:0' shape=(1, ?, ?, 512) dtype=float32>,

'relu5_1': <tf.Tensor 'vgg19_1/Relu_12:0' shape=(1, ?, ?, 512) dtype=float32>,

'conv5_2': <tf.Tensor 'vgg19_1/BiasAdd_13:0' shape=(1, ?, ?, 512) dtype=float32>,

'relu5_2': <tf.Tensor 'vgg19_1/Relu_13:0' shape=(1, ?, ?, 512) dtype=float32>,

'conv5_3': <tf.Tensor 'vgg19_1/BiasAdd_14:0' shape=(1, ?, ?, 512) dtype=float32>,

'relu5_3': <tf.Tensor 'vgg19_1/Relu_14:0' shape=(1, ?, ?, 512) dtype=float32>,

'conv5_4': <tf.Tensor 'vgg19_1/BiasAdd_15:0' shape=(1, ?, ?, 512) dtype=float32>,

'relu5_4': <tf.Tensor 'vgg19_1/Relu_15:0' shape=(1, ?, ?, 512) dtype=float32>}

本文提供的测试代码是完成正确的,已经避免了很多使用Vgg19预训练模型的坑操作,比如:给图片添加维度,转换读取图片的的格式等,为什么这么做的详细原因可参考我的另一篇博客:Tensorflow加载Vgg预训练模型的几个注意事项。

到这里,如何使用tensorflow读取Vgg19模型结束了,若是大家有其他疑惑,可在评论区留言,会定时回答。

好了,以上就是小编分享给大家的全部内容了,希望能给大家一个参考,也希望大家多多支持自学编程网。

- 本文固定链接: https://zxbcw.cn/post/187351/

- 转载请注明:必须在正文中标注并保留原文链接

- QQ群: PHP高手阵营官方总群(344148542)

- QQ群: Yii2.0开发(304864863)