Category: PyTorch

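When using `torch.max` and `F.softmax`, I always get a little confused about which dimension to set, so here is a summary. First, an example on a 2-D tensor: `import torch` `import torch.nn.functional as F` `input = torch.randn(3, 4)` `print(input)` `tensor([[-0.5526, -0.0194, 2.1469, -0.2567], [-0.3337, -0.9229, 0.0376, -0.0801], [1.4721, 0.1181, -2.6214, 1.7721]])` `b = F.softmax(input, dim=0)  # softmax down each column; every column sums to 1` `print(b)` `tensor([[0.1018, 0.3918, ...`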
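The `dim` argument can be checked on a small hand-written tensor. The following is a minimal sketch, not the post's own code; the tensor values and variable names are illustrative assumptions. `dim` names the axis that is reduced or normalized over: `dim=0` works down each column, `dim=1` works along each row.

```python
import torch
import torch.nn.functional as F

# Illustrative 2 x 3 tensor (values chosen so that no maximum is tied).
x = torch.tensor([[1.0, 2.0, 3.0],
                  [4.0, 0.0, -1.0]])

# dim=0 normalizes over the row index, so every COLUMN sums to 1.
col_softmax = F.softmax(x, dim=0)
# dim=1 normalizes over the column index, so every ROW sums to 1.
row_softmax = F.softmax(x, dim=1)

# torch.max with dim returns (values, indices) and removes that dimension.
col_max, col_argmax = torch.max(x, dim=0)  # one result per column, shape (3,)
row_max, row_argmax = torch.max(x, dim=1)  # one result per row, shape (2,)

print(col_softmax.sum(dim=0))  # sums per column
print(row_softmax.sum(dim=1))  # sums per row
print(col_max, col_argmax)
print(row_max, row_argmax)
```

A quick way to remember it: the dimension passed as `dim` is the one that disappears from `torch.max` and the one along which `F.softmax` outputs sum to 1.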


I'll skip the preamble; let's go straight to the code. `import torch.nn as nn` `import torch.nn.functional as F` `class AlexNet_1(nn.Module):` `def __init__(self, num_classes=n):` `super(AlexNet_1, self).__init__()` `self.features = nn.Sequential(nn.Conv2d(3, 64, kernel_size=3, stride=2, padding=1), nn.BatchNorm2d(64), nn.ReLU(inplace=True),)` `def forward(self, x):` `x = self...`


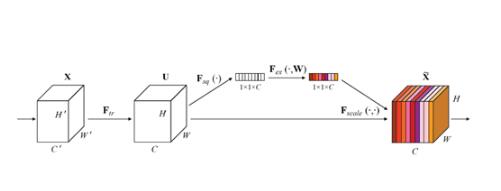

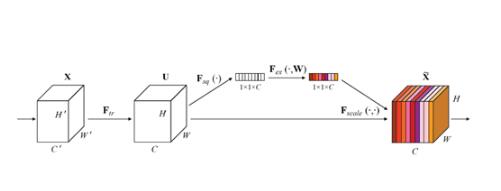

I'll skip the preamble; let's go straight to the code. `from torch import nn` `class SELayer(nn.Module):` `def __init__(self, channel, reduction=16):` `super(SELayer, self).__init__()` `# returns a 1x1 feature map; the number of channels is unchanged` `self.avg_pool = nn.AdaptiveAvgPool2d(1)` `self.fc = nn.Sequential(nn.Linear(channel, channel // reduction, bias=False), nn.ReLU(inplace=True), nn.Linear(channel // reduction, channel, bias=False), ...`
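The excerpt above is cut off before the end of `self.fc` and before `forward`. As a hedged sketch of how such a layer is usually completed, the version below assumes the standard squeeze-and-excitation design: a final `nn.Sigmoid()` gate and a `forward` that rescales each channel. Both of those parts are assumptions, not taken from the truncated post.

```python
import torch
from torch import nn


class SELayer(nn.Module):
    def __init__(self, channel, reduction=16):
        super().__init__()
        # Squeeze: global average pool to a 1x1 map; channel count is unchanged.
        self.avg_pool = nn.AdaptiveAvgPool2d(1)
        # Excitation: bottleneck MLP over the channel vector.
        self.fc = nn.Sequential(
            nn.Linear(channel, channel // reduction, bias=False),
            nn.ReLU(inplace=True),
            nn.Linear(channel // reduction, channel, bias=False),
            nn.Sigmoid(),  # assumption: gate in (0, 1), as in the usual SE block
        )

    def forward(self, x):
        # Assumed forward pass: per-channel weights multiply the input feature map.
        b, c, _, _ = x.size()
        weights = self.avg_pool(x).view(b, c)    # (B, C)
        weights = self.fc(weights).view(b, c, 1, 1)
        return x * weights                       # broadcast over H and W


x = torch.randn(2, 32, 8, 8)
out = SELayer(32)(x)
print(out.shape)
```

Because the gate only rescales channels, the output has the same shape as the input, so a layer like this can be dropped in after any convolutional block.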


I'll skip the preamble; let's go straight to the code. `import torch` `import torch.nn as nn` `import torch.nn.functional as F` `class VGG16(nn.Module):` `def __init__(self):` `super(VGG16, self).__init__()` `# 3 * 224 * 224` `self.conv1_1 = nn.Conv2d(3, 64, 3)  # 64 * 222 * 222` `self.conv1_2 = nn.Conv2d(64, 64, 3, padding=(1, 1))  # 64 * 222 * 222` `self.maxpool1 = nn.MaxPool2d((2, 2), padding=(1, 1))  # pooling 64 * 112 * 112...`
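The shape comments in the excerpt can be verified directly. The sketch below rebuilds only the three layers that are visible above and pushes a dummy 3 x 224 x 224 input through them; the helper `out_size` is a hypothetical name for the usual size formula `floor((n + 2*padding - kernel) / stride) + 1`, which ignores dilation and `ceil_mode`.

```python
import torch
import torch.nn as nn


def out_size(n, kernel, stride=1, padding=0):
    """Spatial output size of a conv or pooling layer (no dilation, floor mode)."""
    return (n + 2 * padding - kernel) // stride + 1


# The three layers shown in the excerpt.
conv1_1 = nn.Conv2d(3, 64, 3)                     # no padding: 224 -> 222
conv1_2 = nn.Conv2d(64, 64, 3, padding=(1, 1))    # padding 1 keeps 222
maxpool1 = nn.MaxPool2d((2, 2), padding=(1, 1))   # stride defaults to the kernel size: 222 -> 112

with torch.no_grad():
    x = torch.randn(1, 3, 224, 224)
    a = conv1_1(x)
    b = conv1_2(a)
    c = maxpool1(b)

# Drop the batch dimension to compare against the comments in the excerpt.
shapes = [tuple(t.shape[1:]) for t in (a, b, c)]
print(shapes)
print(out_size(224, 3), out_size(222, 3, padding=1), out_size(222, 2, stride=2, padding=1))
```

Running a dummy tensor through the first few layers like this is a cheap way to confirm hand-written shape comments before wiring up the fully connected layers.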


2020-10-08


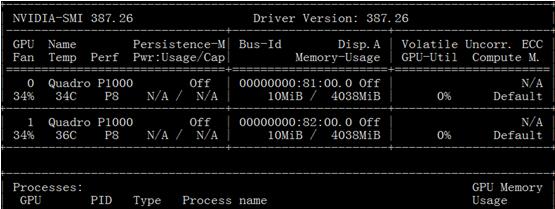

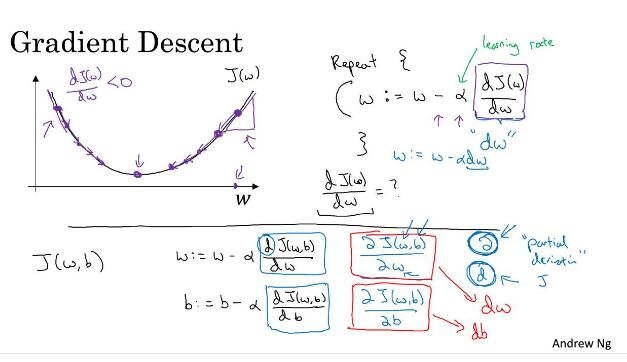

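Preface: Deep learning involves many vector and matrix operations, such as matrix multiplication, matrix addition, and matrix-vector multiplication. Algorithms for deep models, such as BP, Auto-Encoder, and CNN, can all be written as matrix operations rather than explicit loops. On a single-core CPU, however, a matrix operation is unrolled into loops and still executes serially. The many-core architecture of a GPU (Graphics Processing Unit) contains thousands of stream processors and can execute matrix operations in parallel, greatly reducing computation time. As NVIDIA, AMD, and other companies keep advancing the large-scale para... of their GPUs...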
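To make the "matrix operation versus loops" point concrete, here is a small sketch in PyTorch. The function name `matmul_loops` and the matrix sizes are illustrative assumptions; the code falls back to the CPU when no CUDA device is available, so it shows the equivalence of the two forms rather than any particular speedup.

```python
import torch


def matmul_loops(a, b):
    """Matrix product written as explicit serial loops (the form a single core executes)."""
    n, k = a.shape
    k2, m = b.shape
    assert k == k2, "inner dimensions must match"
    out = torch.zeros(n, m, dtype=a.dtype)
    for i in range(n):
        for j in range(m):
            for t in range(k):
                out[i, j] += a[i, t] * b[t, j]
    return out


# Use the GPU when one is present; otherwise run the same code on the CPU.
device = torch.device("cuda" if torch.cuda.is_available() else "cpu")

torch.manual_seed(0)
a = torch.randn(4, 5)
b = torch.randn(5, 3)

looped = matmul_loops(a, b)
# The same computation as one matrix operation, dispatched to the chosen device.
vectorized = (a.to(device) @ b.to(device)).cpu()

same = torch.allclose(looped, vectorized, atol=1e-4)
print(device, same)
```

The single `@` call is what lets the backend spread the work across many cores; the triple loop fixes the order of every multiply-add.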


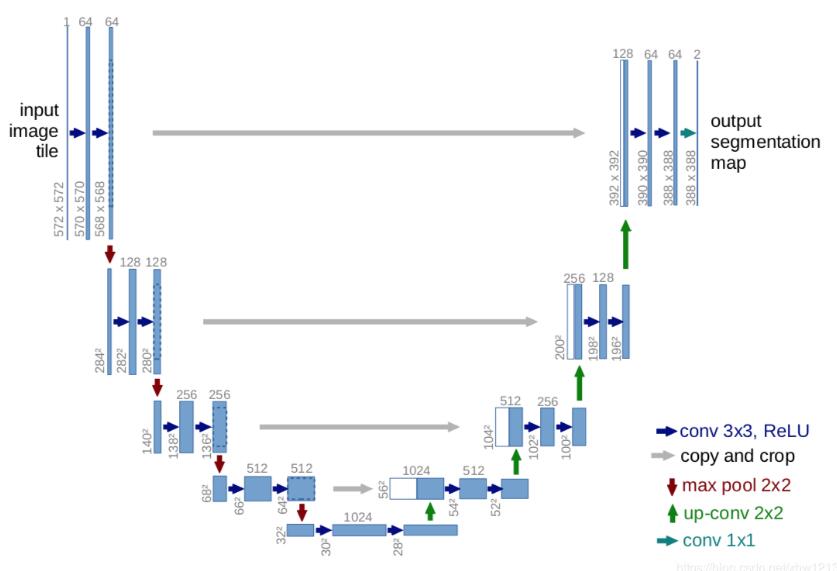

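General steps for designing a neural network: 1. design the framework; 2. design the backbone network. Steps for designing a U-Net: 1. design the U-Net factory pattern; 2. design the encoder-decoder structure; 3. design the convolution module; 4. build the U-Net instance module. The most important characteristics of U-Net: 1. an encoder-decoder structure; 2. a decoder that is more complete than FCN's and uses concatenation; 3. it is essentially a framework, and the encoder can be any of many image-classification networks. Sample code: `import torch` `import torch.nn as nn` `class Unet(nn.Module):` `# Initialization parameters: Encoder, Decoder, bridge` `# bridge defaults to None; if present...`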
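The encoder-decoder structure with concatenation can be sketched in a few lines. This is not the post's `Unet` class (its code is truncated above); `TinyUnet`, `conv_block`, and all channel sizes are hypothetical names and values chosen only to show how decoder features are concatenated with the matching encoder features.

```python
import torch
import torch.nn as nn


def conv_block(in_ch, out_ch):
    # Hypothetical minimal convolution module: one 3x3 conv plus ReLU.
    return nn.Sequential(
        nn.Conv2d(in_ch, out_ch, kernel_size=3, padding=1),
        nn.ReLU(inplace=True),
    )


class TinyUnet(nn.Module):
    def __init__(self, in_ch=3, base=8, num_classes=2):
        super().__init__()
        self.pool = nn.MaxPool2d(2)
        # Encoder: could be swapped for an image-classification backbone.
        self.enc1 = conv_block(in_ch, base)
        self.enc2 = conv_block(base, base * 2)
        # Bridge between encoder and decoder.
        self.bridge = conv_block(base * 2, base * 4)
        # Decoder: upsample, concatenate the skip connection, then convolve.
        self.up2 = nn.ConvTranspose2d(base * 4, base * 2, kernel_size=2, stride=2)
        self.dec2 = conv_block(base * 4, base * 2)
        self.up1 = nn.ConvTranspose2d(base * 2, base, kernel_size=2, stride=2)
        self.dec1 = conv_block(base * 2, base)
        self.head = nn.Conv2d(base, num_classes, kernel_size=1)

    def forward(self, x):
        e1 = self.enc1(x)                    # full resolution
        e2 = self.enc2(self.pool(e1))        # 1/2 resolution
        b = self.bridge(self.pool(e2))       # 1/4 resolution
        d2 = self.dec2(torch.cat([self.up2(b), e2], dim=1))   # concat along channels
        d1 = self.dec1(torch.cat([self.up1(d2), e1], dim=1))
        return self.head(d1)


model = TinyUnet()
out = model(torch.randn(1, 3, 32, 32))
print(out.shape)
```

The concatenation along `dim=1` is why each decoder block takes twice as many input channels as the upsampling layer produces.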


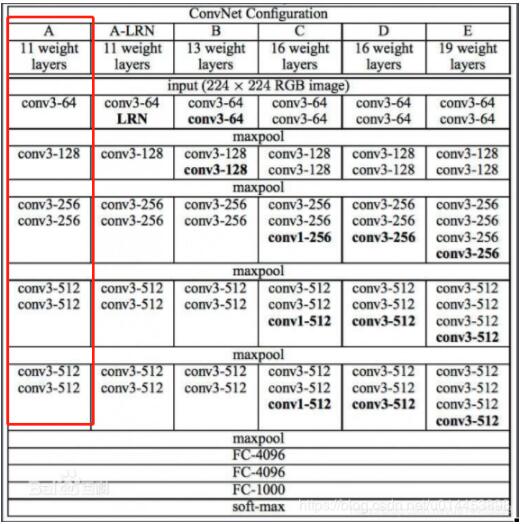

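First, this is the VGG architecture diagram. VGG11 is the structure in the red box and is divided into five blocks; for example, the first block of VGG11 in the red box is a single conv3-64 convolutional layer. Step 1: when writing the VGG code, first define a method `vgg_block(n, in, out)` that builds the convolutional and pooling layers of each VGG block, where `n` is the number of convolutional layers in the block, `in` is the number of input channels, and `out` is the number of output channels. With the block in place, we also need a method that stacks the blocks together, which we call `vgg_stack`: `def vgg_stack(num_convs, channels):` `# vgg_ne...`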
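A possible shape for the two helpers described above is sketched here. The post's own bodies are truncated, so everything inside the functions is an assumption based on the usual VGG layout (3x3 convolutions with padding 1, ReLU, and a 2x2 max pool closing each block). The parameter is named `in_channels` because `in` is a reserved word in Python, and the VGG11 configuration tuple is the commonly used one rather than something stated in the excerpt.

```python
import torch
import torch.nn as nn


def vgg_block(num_convs, in_channels, out_channels):
    """num_convs 3x3 conv + ReLU layers followed by one 2x2 max pool."""
    layers = []
    for i in range(num_convs):
        layers.append(nn.Conv2d(in_channels if i == 0 else out_channels,
                                out_channels, kernel_size=3, padding=1))
        layers.append(nn.ReLU(inplace=True))
    layers.append(nn.MaxPool2d(kernel_size=2, stride=2))
    return nn.Sequential(*layers)


def vgg_stack(num_convs, channels):
    """Stack one vgg_block per (count, (in, out)) pair."""
    blocks = [vgg_block(n, c_in, c_out)
              for n, (c_in, c_out) in zip(num_convs, channels)]
    return nn.Sequential(*blocks)


# Assumed VGG11 feature configuration: five blocks, eight conv layers in total.
features = vgg_stack(
    (1, 1, 2, 2, 2),
    ((3, 64), (64, 128), (128, 256), (256, 512), (512, 512)),
)

out = features(torch.randn(1, 3, 32, 32))
num_conv_layers = sum(isinstance(m, nn.Conv2d) for m in features.modules())
print(out.shape, num_conv_layers)
```

Each block halves the spatial size, so a 32 x 32 input ends at 1 x 1 after five blocks; a classifier head would be attached after flattening that output.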


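DataLoader: when the built-in Dataset classes do not meet your needs, define your own. When subclassing `torch.utils.data.Dataset` you need to override `__init__`, `__getitem__`, and `__len__`; otherwise, when `DataLoader` loads the custom Dataset, the missing methods lead to errors such as `NotImplementedError`. NumPy broadcasting: all input arrays are aligned to the one with the longest shape, and any missing leading dimensions are filled in by prepending 1s. The output shape is the maximum, along each axis, of the input shapes. If an axis of an input array has the same length as the corresponding axis of the output array, or has length 1, this...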
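Both notes above can be illustrated in one short script. The class name `SquaresDataset` and the array shapes are illustrative assumptions; the sketch shows the three methods a custom Dataset overrides and then applies the broadcasting rule to two concrete shapes.

```python
import numpy as np
import torch
from torch.utils.data import DataLoader, Dataset


class SquaresDataset(Dataset):
    """Hypothetical dataset that yields (x, x**2) pairs."""

    def __init__(self, n):
        self.x = torch.arange(n, dtype=torch.float32)

    def __len__(self):
        # Lets DataLoader know how many samples there are.
        return len(self.x)

    def __getitem__(self, idx):
        # Returns one sample; DataLoader collates samples into batches.
        return self.x[idx], self.x[idx] ** 2


loader = DataLoader(SquaresDataset(10), batch_size=4, shuffle=False)
batch_sizes = [len(xb) for xb, yb in loader]
print(batch_sizes)

# Broadcasting: (5, 1) is first padded on the left to (1, 5, 1),
# then each axis of the result takes the larger of the two lengths.
a = np.ones((3, 1, 4))
b = np.ones((5, 1))
result_shape = (a + b).shape
print(result_shape)
```

With ten samples and a batch size of 4, the last batch is smaller than the others unless `drop_last=True` is passed to `DataLoader`.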


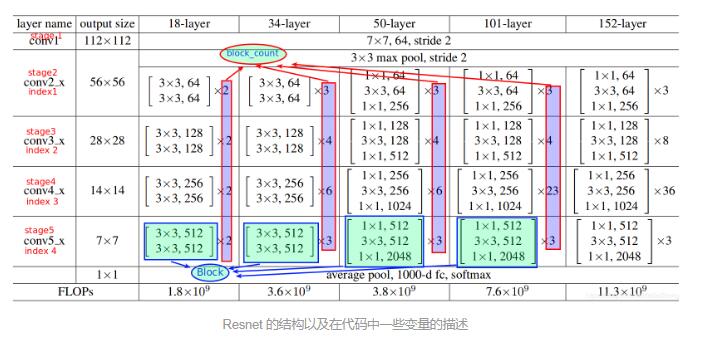

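In this article, we discuss some of the theory behind Mask R-CNN and how to use a pre-trained Mask R-CNN model in PyTorch. 1. Semantic segmentation, object detection, and instance segmentation. As introduced earlier: (1) Semantic segmentation: we assign a class label (e.g., dog, cat, person, background) to every pixel in the image. (2) Object detection: we assign class labels to bounding boxes that contain objects. A very natural idea is to combine the two: we want to identify a bounding box around each object only, and find which pixels in the bounding box...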


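Without further ado, straight to the code. `model.zero_grad()` `optimizer.zero_grad()` First, both calls reset the gradients of the model's parameters to zero. When `optimizer = optim.Optimizer(net.parameters())`, the two are equivalent, where `Optimizer` can be Adam, SGD, or another optimizer. `def zero_grad(self):` `"""Sets gradients of all model parameters to zero."""` `for p in self.parameters():` `if p.grad is not None:` `p.grad.data.zero_()` Supplementary notes: in PyTorch, `optimizer.zero_grad`, loss, and ne...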
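The equivalence described above can be checked with a tiny model. This is a minimal sketch with assumed names (`net`, `grads_cleared`, `backward_once`). One caveat it accounts for: depending on the PyTorch version, `zero_grad()` may either fill gradients with zeros or set them to `None`, so the helper accepts both as "cleared".

```python
import torch
import torch.nn as nn
import torch.optim as optim


def grads_cleared(module):
    """True if every parameter's gradient is None or all zeros."""
    return all(p.grad is None or bool(torch.all(p.grad == 0))
               for p in module.parameters())


net = nn.Linear(4, 2)
# The optimizer is built from ALL of net's parameters, which is the
# condition under which the two zero_grad calls are equivalent.
optimizer = optim.SGD(net.parameters(), lr=0.1)


def backward_once():
    loss = net(torch.randn(8, 4)).sum()
    loss.backward()  # accumulates into p.grad


backward_once()
had_grads = not grads_cleared(net)

net.zero_grad()                  # clears gradients via the module
cleared_by_model = grads_cleared(net)

backward_once()
optimizer.zero_grad()            # clears gradients via the optimizer
cleared_by_optimizer = grads_cleared(net)

print(had_grads, cleared_by_model, cleared_by_optimizer)
```

If the optimizer only holds a subset of the parameters (for example when part of the model is frozen or handled by a second optimizer), `optimizer.zero_grad()` clears only that subset, while `model.zero_grad()` clears all of them.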
